XML Framework for Score Data

Overview

Score files hold notes, contour segments (ramps) and other details specific to a musical passage. There are two score-file formats:

- Simple scores export well to MUSIC-N and to MIDI but contain insufficient information for MusicXML export. Simple scores have no measures, which means there's no fussing about whether musical lines fit neatly into metric time spans. Ties and tuplets are neither present nor necessary because note timings may employ any positive ratio. Since note succession is meaningless, note periods and note durations come down to the same thing.

- Measured scores are distinguished from simple scores by the presence of measures, bracketed tuplets, ties, neumes, and dots, with all the constraints that these entail. In a measured score, notes have predecessors and successors, and the time-period separating one note's start time from its successor's start time must be expressible as a neume (whole note, half note, quarter note, etc.) with some number of dots. If a voice holds a tone over a bar line, you have to break the tone up into consecutive notes bound by ties. If you want other than duple rhythmic divisions, you must bracket notes in tuplets.

I have employed both the simple and measured formats in the latest version of a composing program I have developed for Petr Kotik. This program is based on Markov chains and, among other features, can generate two separate chains, one controlling rhythm and the second controlling pitch. Each result is stored on disk using the simple file format. To produce the combined result, two files are read in and the pitches of the second file are imposed upon the durations of the first file. The result is initially also in the simple format, but my framework has the rudimentary (duple rhythms only) ability to impose a meter upon a simple score. The result thus processed then exports to MusicXML for further manipulation by Kotik using Finale.

score file with a duration of eight quarter notes.

The XML schema specified in score.xsd applies both to simple and measured scores.

The XML excerpt presented as Listing 10 blocks out the structure of the score construct.

The listing shows two score properties and three element groupings.

The score properties startTime and endTime are both expressed as ratios relative to the whole note; thus this particular ‘score’ starts at time 0 (expressed using the ratio 0:1) and lasts for two quarters.

The score document element has ensemble, groups, and events components.

We shall flesh out each of these components in turn.

The ensemble mapping reflects the ensemble detailed previously, which declared four voices (see Listing 4), four contours (three “Velocity” and one “PitchBend”; Listing 5), and five instruments (see Listing 6).

Score-Ensemble Mapping

The ensemble mapping component is illustrated by Listing 11.

This component exists simply to enable consistency checks between a score file and its associated ensemble file.

The ensemble block contains three types of entity reference: voice, instrument, and contour.

If any entity identified here in the score is undefined by the ensemble, or if any entity defined in the ensemble is unidentified here in the score, then a load error is thrown.
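The two-way check described above can be sketched as a symmetric difference between two sets of names. This is only an illustration under stated assumptions: the class `EnsembleCheck`, the method `mismatches`, and the voice names are hypothetical and are not part of the framework's API.

```java
import java.util.Set;
import java.util.TreeSet;

class EnsembleCheck {
    // Hypothetical helper: returns every name that appears in exactly one
    // of the two sets (defined by the ensemble, identified by the score).
    static Set<String> mismatches(Set<String> ensembleNames, Set<String> scoreNames) {
        Set<String> result = new TreeSet<>(ensembleNames);
        result.addAll(scoreNames);               // union of both sets
        Set<String> common = new TreeSet<>(ensembleNames);
        common.retainAll(scoreNames);            // intersection of both sets
        result.removeAll(common);                // union minus intersection
        return result;
    }

    public static void main(String[] args) {
        Set<String> ensembleVoices = Set.of("Melody", "Bass");
        Set<String> scoreVoices = Set.of("Melody", "Harmony");
        Set<String> bad = mismatches(ensembleVoices, scoreVoices);
        if (!bad.isEmpty()) {
            // A real loader would raise its load error at this point.
            System.out.println("Load error, unmatched voices: " + bad);
        }
    }
}
```

The same comparison would be repeated for instruments and for contours.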

Notes, Measures, and Tuplets

Before treating the groups and events sections in detail, it will be helpful to provide an example showing how elements coordinate between these two sections.

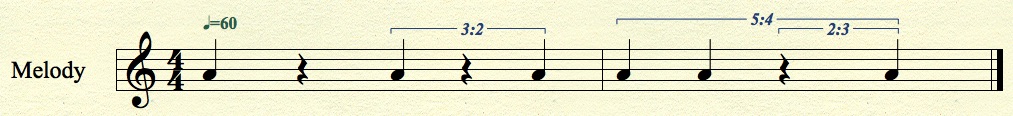

Figure 1 presents the goal of this example, a rhythmic sequence rendered using Finale.

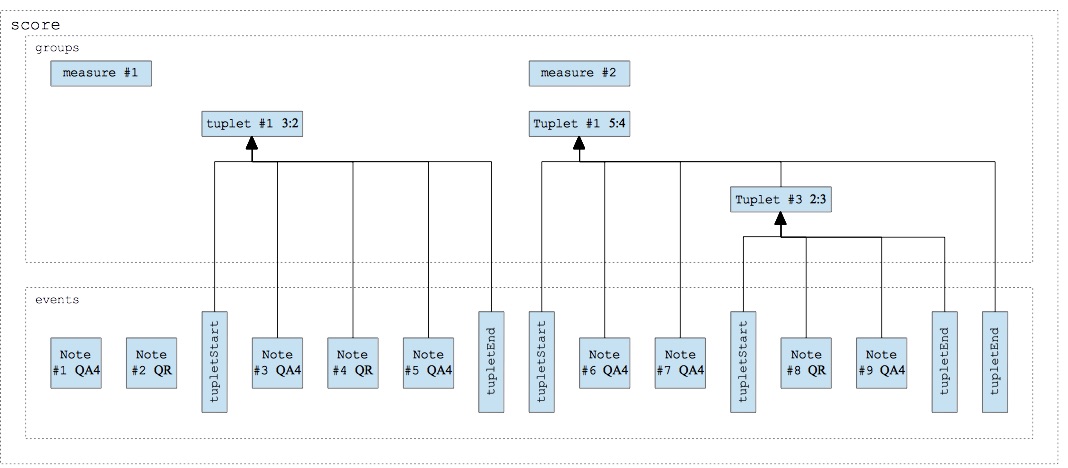

Listing 12 presents the XML-coded structure, which is relationally diagrammed by Figure 2.

Notice that groups contains two measure elements and three tuplet elements.

Each tuplet group is supported by paired tupletStart and tupletEnd events, one pair for each voice that participates in the tuplet.

Notice also that sounding tones and rests are both indicated using note events, and that you can distinguish one from the other by inspecting each note's onsetPitch property.

The present example illustrates the measured score format. Had the alternative simple format been employed, the groups area would have contained neither measure nor tuplet elements. Also, in place of the notatedDuration property, the note would substitute a period ratio, which would not necessarily be duple. There would also be no need for rests.

| Group Type | Description |
|---|---|
| segment | Start time supported by segmentStart event; end time supported by segmentEnd event; origin and goal. |
| measure | Start time, end time, time signature, key signature, rate, pickup flag. |
| chord | Start time supported by chordStart event; end time supported by chordEnd event. Notes within a chord share the same start time and period. |
| tuplet | Start time supported by tupletStart event; end time supported by tupletEnd event; tuplet rate; enclosing tuplet (if any). |
| slur | Slurs convert into MUSIC-N as slur-from note references and into MusicXML as slur-markings. |
| phrase | The purpose of a phrase is user driven. |

groups element.

Score Groups

The groups component of a score file holds elements that affect groups of notes.

Table 1 lists supported group types.

Listing 13 illustrates the XML text format.

The first and second elements in Listing 13 describe how tempo (contour #0) and velocity (contour #1) behave over time. By convention, contour #0 always controls tempo, so the segment element for contour #0, index #0 indicates that the tempo should hold steady at 1 whole note per second from time 0 (expressed using the ratio 0:1) to time ∞ (infinity). The fact that contour #1 controls velocity for voice #1 “Melody” is established in Listing 5.

The third and fourth elements in Listing 13 identify chords; that is, collections of notes which play simultaneously.

Following the practice earlier described for tuplets, each chord is supported by paired chordStart and chordEnd events, one pair for each voice that participates in the chord.

events element.

Score Events


Events share the following attributes in common:

In addition to a voice identifier, all score events have a time and a duration.

Earlier versions of my score architecture represented times and durations using floating-point numbers, but in 2012 I made the decision to express all times and durations as ratios. The ratio class has been described elsewhere on this site in connection with the musical calculator, and it has the feature that numerators and denominators are always reduced to simplest terms. This means that if you ask for the ratio of 6 to 8, the framework will give you back the ratio of 3 to 4.
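A minimal sketch of this reduce-on-construction behavior follows. The class name `ReducedRatio` and its fields are hypothetical stand-ins; the framework's actual ratio class is described elsewhere on this site and may differ in every detail.

```java
class ReducedRatio {
    final long numerator;
    final long denominator;

    // Normalizes the sign into the numerator and divides out the
    // greatest common divisor, so 6:8 is stored as 3:4.
    ReducedRatio(long numerator, long denominator) {
        if (denominator == 0) {
            throw new IllegalArgumentException("zero denominator");
        }
        if (denominator < 0) {
            numerator = -numerator;
            denominator = -denominator;
        }
        long g = gcd(Math.abs(numerator), denominator);
        this.numerator = numerator / g;
        this.denominator = denominator / g;
    }

    // Euclid's algorithm; gcd(0, d) is d, so zero is stored as 0:1.
    private static long gcd(long a, long b) {
        return b == 0 ? a : gcd(b, a % b);
    }

    @Override
    public String toString() {
        return numerator + ":" + denominator;
    }

    public static void main(String[] args) {
        System.out.println(new ReducedRatio(6, 8)); // prints 3:4
    }
}
```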

When music is notated in binary divisions of the whole note, using dots and ties to create compounds and using tuplet brackets to indicate non-binary divisions, then every duration thus derived must necessarily be related to the whole note by a ratio of two integers (likewise to the whole note, which is what unity stands for in my architecture). And when durations are not rational then there is always a close rational approximation.

The previous paragraph argued the feasibility of using ratios to represent time and duration, but ratios also have positive advantages:

A score organizes its collection of events into time order.

If two events start at the same time, then the sort order proceeds according to Table 2.

Everything else held equal, the event's group id breaks the tie.

The events of a file created using my score API will adhere to this sort order. However, score files intended for input are not required to be pre-sorted; the API takes care of that.
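The three-level ordering described above (start time, then event type, then group id) can be sketched as a comparator. Everything named here is an assumption made for illustration: the `Event` class, its fields, and the `Type` enum are hypothetical, and the enum order simply follows the sequence in which Table 2 lists the event types.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

class EventSortSketch {
    // Assumed rank: the enum ordinal mirrors the order given in Table 2.
    enum Type {
        CHORD_END, TUPLET_END, SEGMENT_END, CLEF_CHANGE, HARP_PEDALLING_CHANGE,
        REST, PITCHED_NOTE, SEGMENT_START, TUPLET_START, CHORD_START
    }

    static class Event {
        final long num;      // start time numerator
        final long den;      // start time denominator, assumed positive
        final Type type;
        final int groupId;

        Event(long num, long den, Type type, int groupId) {
            this.num = num;
            this.den = den;
            this.type = type;
            this.groupId = groupId;
        }

        @Override
        public String toString() {
            return type + "@" + num + ":" + den + "#" + groupId;
        }
    }

    static final Comparator<Event> ORDER = (a, b) -> {
        // Compare start times exactly by cross-multiplying the ratios.
        int byTime = Long.compare(a.num * b.den, b.num * a.den);
        if (byTime != 0) return byTime;
        int byType = a.type.compareTo(b.type);
        if (byType != 0) return byType;
        return Integer.compare(a.groupId, b.groupId);
    };

    // Returns a sorted copy, so unsorted input is acceptable.
    static List<Event> sorted(List<Event> events) {
        List<Event> copy = new ArrayList<>(events);
        copy.sort(ORDER);
        return copy;
    }

    public static void main(String[] args) {
        List<Event> events = List.of(
            new Event(1, 2, Type.CHORD_START, 0),
            new Event(0, 1, Type.PITCHED_NOTE, 0),
            new Event(1, 2, Type.CHORD_END, 1),
            new Event(2, 4, Type.CHORD_END, 0));
        System.out.println(sorted(events));
    }
}
```

Because the times are ratios, 2:4 and 1:2 compare as equal with no rounding tolerance, and the tie falls through to the type rank and then to the group id.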

While my score framework accepts only timings expressed in ratios, it seems that certain transitions should be timed in an absolute way.

Remember transition times in speech synthesis: 300 msec. for a diphthong, 100 msec. for a glide, and 50 msec for a plosive.

The resolution to this challenge is illustrated by the call to method

Here follows a blow-by-blow description of what Listing 15 does to flesh out the content of peak1Contour.

The events grouping holds timed activity which applies to specific voices.

The XML excerpt provided in Listing 14 presents examples of segment-start events, chord-start events, notes, chord-end events, and segment-end events.

The full list of event types appears in Table 2.

groups data.

For example, the group id for tupletStart and tupletEnd events references a particular tuplet declared under groups.

Event Times and Durations

Having no rounding error makes it easier to verify that a sequence of durations properly fills out a measure. The API for my score architecture performs no such check, since it does not require notes to be grouped into measures. However, the Ashton score-generating engine, which converts character-encoded scores into my format, does perform such a check.

This second advantage of ratios becomes useful when exporting into MusicXML. Each MusicXML measure has, as one of its properties, the number of divisions of a quarter note. Thus a 4/4 measure containing a mixture of eighth notes and eighth-note triplets would have 6 divisions per quarter note, where a regular eighth note would have duration 3 and an eighth note inside a triplet would have duration 2.
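One way to arrive at the divisions value is to take the least common multiple of the reduced denominators of all durations, measured in quarter notes. The sketch below works under that assumption; `DivisionsSketch` and its methods are hypothetical names, and the framework's actual export code may proceed differently.

```java
class DivisionsSketch {
    static long gcd(long a, long b) {
        return b == 0 ? a : gcd(b, a % b);
    }

    static long lcm(long a, long b) {
        return a / gcd(a, b) * b;
    }

    // Each duration is a {numerator, denominator} pair measured in
    // quarter notes. The result is the smallest number of divisions per
    // quarter note that makes every duration a whole number.
    static long divisionsPerQuarter(long[][] durationsInQuarters) {
        long divisions = 1;
        for (long[] d : durationsInQuarters) {
            long reducedDenominator = d[1] / gcd(d[0], d[1]);
            divisions = lcm(divisions, reducedDenominator);
        }
        return divisions;
    }

    // Converts one duration into integer MusicXML duration units.
    static long toDivisions(long[] duration, long divisions) {
        return duration[0] * divisions / duration[1];
    }

    public static void main(String[] args) {
        long[] eighth = {1, 2};         // half of a quarter note
        long[] tripletEighth = {1, 3};  // a third of a quarter note
        long divisions = divisionsPerQuarter(new long[][] {eighth, tripletEighth});
        System.out.println(divisions);                             // prints 6
        System.out.println(toDivisions(eighth, divisions));        // prints 3
        System.out.println(toDivisions(tripletEighth, divisions)); // prints 2
    }
}
```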

Table 2: Score event types, arranged by sort order.

| Event Type |
|---|
| chordEnd |
| tupletEnd |
| segmentEnd |
| clefChange |
| harpPedallingChange |
| note without pitch (rest) |
| note with pitch |
| segmentStart |
| tupletStart |
| chordStart |

Event Sequencing

Absolute to Relative Durations

```java
/* 001 */ Pitch C4 = Pitch.unison();
/* 002 */ Ratio time = Ratio.ZERO;
/* 003 */ Ratio quarter = Ratio.UNITY;
/* 004 */ Ratio half = quarter.multiply(2);
/* 005 */ tempoContour.createOrigin(time, 2.);
/* 006 */ peak1Contour.createOrigin(time, spectrumX.getPeak1());
/* 007 */ time = time.add(quarter);
/* 008 */ Ratio transition = score.ratioBefore(time, 0.1);
/* 009 */ time = time.subtract(transition);
/* 010 */ peak1Contour.createGoal(time, transition, spectrumY.getPeak1());
/* 011 */ time = time.add(quarter).subtract(transition);
/* 012 */ peak1Contour.createOrigin(time, spectrumY.getPeak1());
/* 013 */ time = Ratio.ZERO;
/* 014 */ score.addNote(voice.getID(), time, half, half, instrument.getID(), C4, 0);
```

ratioBefore() in line #8 of Listing 15. This code has been highly abridged; it is sufficient for you to know that the variable score is a Score instance, that the variables tempoContour and peak1Contour dereference contours held within score, and that the variables spectrumX and spectrumY are objects with a property called “peak1” that specifies the center frequency of a spectral peak.

The unabridged code would instantiate contours for each peak or trough in the spectrum; however, including those would simply clutter things up. Understand that whatever I do to peak1Contour in Listing 15 would also be done to peak2Contour, trough5Contour, and so forth.

peak1Contour.

This narrative is provided in service of showing how ratioBefore() operates in context:

createOrigin() in line #5 fixes the tempo at 2 quarter notes per second (♩=120) from time 0 on through eternity.

createOrigin() in line #6 fixes the value of peak1Contour at spectrumX.getPeak1() from time 0 on through eternity.

createGoal() in line #10 will generate a peak-1 transition from spectrumX to spectrumY. However, createGoal() needs to know when this transition will start, and how long it will last.

spectrumY to come into its own one quarter note from the beginning of the passage.

This time value is calculated by line #7.

ratioBefore() in line #8 does two things:

Since the tempo (from line #5) is 2 quarters per second, the result of line #8 will be 0.1÷(0.5)=Ratio(1,5).

Line #9 backs time off by the transition duration from the value attained in line #7.

createGoal() in line #10.

This method call truncates one segment and generates two more:

- It truncates peak1Contour's initial segment so that instead of sustaining off to eternity, the initial segment completes after Ratio(1,5) whole notes.
- It generates a second segment starting at Ratio(1,5) (from argument #1) and lasting for Ratio(1,20) whole notes (from argument #2). The origin for this second segment is spectrumX.getPeak1(), picked up from the now-truncated initial segment. The goal for this second segment is spectrumY.getPeak1() (from argument #3).
- It generates a third segment starting at Ratio(1,1) (= argument #1 + argument #2). This third segment sustains the value from argument #3 on through eternity.
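The absolute-to-relative conversion behind line #8 can be sketched as follows, working in the listing's own units, where `quarter` is `Ratio.UNITY` and the tempo is 2 units per second. This is not the framework's ratioBefore(): that method presumably consults the tempo contour at the given time, whereas this sketch assumes a constant tempo and approximates the result on a fixed grid. The names `RatioBeforeSketch`, `secondsToRatio`, and the `resolution` parameter are hypothetical.

```java
class RatioBeforeSketch {
    static long gcd(long a, long b) {
        return b == 0 ? a : gcd(b, a % b);
    }

    // Converts an absolute time span in seconds into score time units at
    // a constant tempo (units per second). The product is rounded to the
    // nearest 1/resolution and reduced; returns {numerator, denominator}.
    static long[] secondsToRatio(double seconds, double unitsPerSecond, long resolution) {
        long numerator = Math.round(seconds * unitsPerSecond * resolution);
        long g = gcd(numerator, resolution);
        return new long[] {numerator / g, resolution / g};
    }

    public static void main(String[] args) {
        // 0.1 seconds at 2 units per second, on a grid of thousandths.
        long[] transition = secondsToRatio(0.1, 2.0, 1000);
        System.out.println(transition[0] + ":" + transition[1]); // prints 1:5
    }
}
```

Rounding to a grid is one simple way to obtain the "close rational approximation" mentioned earlier; a continued-fraction search would give smaller denominators for awkward values.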

More about Notes

The most essential member of the score's events collection is, of course, the note. The timing information for notes includes start time, period, and duration. Period and duration are the same thing in simple scores.

In measured scores,

- The period spans the time interval between the current note's start time and the next note's start time, while

- The duration spans the time interval between the current note's start time and the current note's release time.

All three of these note timing attributes are represented using ratios. The period may employ any positive ratio in a simple score, but in a measured score these ratios are limited to denominators which are powers of two, and more specifically to values constructable using neumes and dots.

Ties between notes are indicated in memory using both tie-from and tie-to references; however only the tie-from reference saves to file. Ties may be applied only when the notes satisfy the following conditions:

- The release time (both start + duration and start + period) of the tie-from note matches the start time of the tie-to note.

- The tie-from note and tie-to note have matching voices.

- The tie-from note and tie-to note have matching instruments.

- The tie-from note and tie-to note have matching onset pitches (see below).
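The four conditions above translate directly into a predicate. The sketch below is illustrative only: the `Note` class, its fields, and the `canTie` method are hypothetical, times are held as {numerator, denominator} pairs rather than as framework Ratio objects, and pitches are compared as plain strings.

```java
class TieCheckSketch {
    static class Note {
        final long[] start;     // {numerator, denominator}
        final long[] period;
        final long[] duration;
        final int voiceId;
        final int instrumentId;
        final String onsetPitch;

        Note(long[] start, long[] period, long[] duration,
             int voiceId, int instrumentId, String onsetPitch) {
            this.start = start;
            this.period = period;
            this.duration = duration;
            this.voiceId = voiceId;
            this.instrumentId = instrumentId;
            this.onsetPitch = onsetPitch;
        }
    }

    // Exact fraction addition; the result is left unreduced.
    static long[] add(long[] a, long[] b) {
        return new long[] {a[0] * b[1] + b[0] * a[1], a[1] * b[1]};
    }

    // Exact fraction equality by cross-multiplication.
    static boolean same(long[] a, long[] b) {
        return a[0] * b[1] == b[0] * a[1];
    }

    static boolean canTie(Note from, Note to) {
        return same(add(from.start, from.duration), to.start)  // start + duration
            && same(add(from.start, from.period), to.start)    // start + period
            && from.voiceId == to.voiceId
            && from.instrumentId == to.instrumentId
            && from.onsetPitch.equals(to.onsetPitch);
    }

    public static void main(String[] args) {
        long[] quarter = {1, 4};
        Note beforeBar = new Note(new long[] {3, 4}, quarter, quarter, 1, 1, "C4");
        Note afterBar = new Note(new long[] {1, 1}, quarter, quarter, 1, 1, "C4");
        System.out.println(canTie(beforeBar, afterBar)); // prints true
    }
}
```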

To obtain non-duple proportions, one must enclose notes in tuplet brackets. Figure 1 shows how the data elements are structured for a note enclosed within a tuplet, which tuplet is itself enclosed within a larger tuplet. The figure indicates that the note's period is a sixteenth without dots (ratio 1:4), that the ‘inside’ tuplet compresses three notes into the space of two (ratio 3:2), and that the ‘outside’ tuplet expands 5 notes into the space of 6. Suppose also that the measure overall has a neutral time-compression ratio (1:1). Then the adjusted note duration will be

$$\frac{1}{4} \times \frac{2}{3} \times \frac{6}{5} \times \frac{1}{1} = \frac{12}{60} = \frac{1}{5}$$

Measures can have time-compression ratios. My original Ashton score transcription accounted for time compression by permitting time signatures such as 4-20: four beats per measure and a twentieth of a whole note (four fifths of a sixteenth note) gets one beat. However since the MIDI and MusicXML coding conventions for time signatures only accept powers of two in the denominator, I was pushed to the alternative: present the time signature as 4-16 but indicate a relative tempo change of ♪♪♪♪♪♪=♪♪♪.

If a collection of note events is enclosed within a chord, then all the note start times must match the chord start time, and the period of each note must match the period of the chord.

The same chord can hold notes from different voices, but if this happens each voice must have its own chordStart/chordEnd pair. This is necessary for both MIDI and MusicXML conversion because the event sequence is traversed separately for each MIDI track or for each MusicXML part, and because voices are my score framework's internal counterpart for MIDI tracks and for MusicXML parts.

Notes in my score framework have two pitches, the first indicating the pitch as of the note's onset (start time) and the second indicating the pitch as of the note's release (end time). I decided to represent pitch in this way because I wanted to be able to indicate glisses; however since the framework has been implemented I have employed this feature only once, when I was trying to recreate Xenakis's stochastic music program. The two-pitch solution is suitable neither for MIDI, where glisses are produced using single notes with dynamic pitch bend, nor MusicXML, where portamentos connect consecutive notes, like ties.

The pitch construct used by my score framework is the same as the one described for the musical calculator: each pitch is described by its degree, its octave (a digit from 0-9; 4 indicating the octave above middle C), and a cents inflection (a signed integer). A degree is described in turn by its letter name (A-G) and its accidental (double flat, flat, natural, sharp, double sharp). Rests are indicated using pitches with null degrees.

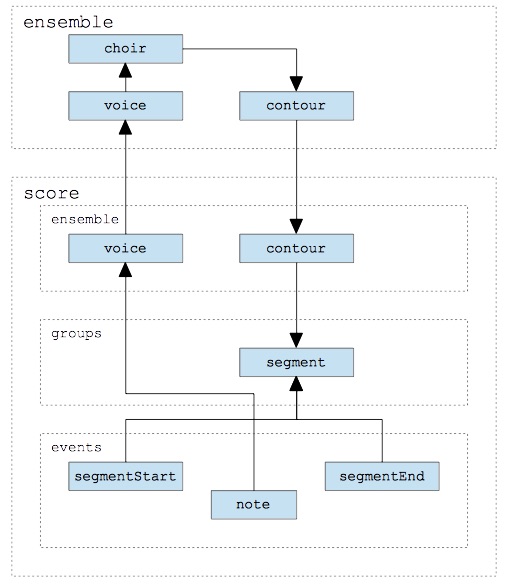

Like all other score events, notes are associated with voices. The voice provides vertical context for the note within the score. Among other things it contributes to the access path linking the note to its associated contours. This access path is illustrated in Figure 2.

Every voice should have a default instrument, but sometimes one voice may have several instruments, much as a percussion player may be asked to switch back and forth between marimba and xylophone. Thus each individual note directly references which of possibly many instruments has been chosen to play that particular note.

- Accent: A non-negative integer.

- Phrase: If the note has membership in a phrase, this attribute will reference a phrase element in the score's groups data.

- Slur-From Note: If the note participates in a slur, this attribute will reference the note's predecessor in the slur.

- Slur-To Note: If the note participates in a slur, this attribute will reference the note's successor in the slur.

- Comment: Stored text whose purpose is user-driven.

Extending the attributes listed thus far, these additional attributes are MusicXML specific:

- Beam — Beaming indicator for MusicXML export: BEGIN, CONTINUE, END, FORWARD_HOOK, BACKWORD_HOOK.

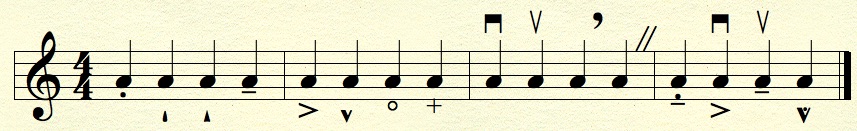

- Indication — A collection of MusicXML indications (e.g. sforzando, staccato) which should be applied to the note during MusicXML conversion.

- Staff ID — Used for MusicXML export when a note crosses to another staff.

Articulation

One of the design principles for this score framework was that representations should be neutral. However, I was unable to achieve this with respect to the note-specific symbols used to indicate articulations, accents, bowings, and such. Examples of these are presented in Figure 3, which was rendered using Finale from MusicXML generated by my framework. Listing 16 shows the source score file.

Note #14 through note #16 in Listing 16 illustrate multiple indications applied to the same note. For example, note #14 indicates a wedge accent played using a down bow.

My inability to achieve a neutral representation for note-specific indications was not for lack of thinking about the subject. I have made a study of how articulations and accents may be realized synthetically, which I intend to post shortly on this site. The challenge for me was that the symbols in Figure 3 evolved for live acoustic instruments and have imprecise synthetic realizations. Some of them, e.g. staccato and tenuto, affect note durations and note-to-note spacing. Others, e.g. accent, might affect the momentary dynamic of a note, but alternatively might indicate spacing prior to the attack or an extended hold period in the envelope, just following the attack. Still others, such as the little o and cross, have timbral significance with interpretations as different as holding a toilet plunger open or closed on a trumpet, or whacking a hi-hat with the cymbals apart or together.

Consider the indications which pertain specifically to note spacings: staccato, tenuto, staccatissimo. Leland Smith's SCORE program accommodated note spacings with a “duty factor” controlling how a note's sounding duration relates to the time-period between consecutive note attacks. Thus staccato might indicate a duty factor of 50% while tenuto might indicate 100%. But does this same staccato percentage apply equally to fast and slow tempi? And what about slurs? As with tenuto, the duration of a slur-from note extends right up to the slur-to attack, but a slur goes farther than that. With a slur, the envelope begun during the from-note sustains through the to-note.

I'm coming into the opinion that traditional notation incorporates understanding that synthetic formats like MIDI and MUSIC-N do not express, at least not explicitly. It remains good design to store each musical fact once and to rely on interfaces to make the fact meaningful in each exported format; however for articulations it now seems to me that the discrete verbal indication should be the starting point. The interface should not be universal but rather should be custom configured according to how the sound is being produced.

Next topic: Exporting to External Formats

| © Charles Ames | Page created: 2013-10-16 | Last updated: 2015-09-15 |